How AI Dialogue Sharpens Human Thought

The paradox of LLMs: they make us think better by forcing us to explain clearly

Picture this: You’re in a meeting. Someone asks you to explain your strategy.

You start confidently:

“Well, we’re leveraging synergies to optimize our market position through strategic initiatives that align with our core competencies...”

They interrupt: “But what exactly are you doing?”

Suddenly, you’re drowning. The words that felt so solid in your head dissolve into vapor. You realize, with creeping horror, that you don’t actually know what you mean.

This moment—this gap between feeling like you understand and actually understanding—has a name. Psychologists call it the Illusion of Explanatory Depth. We think we understand how toilets work, how democracy functions, how our own strategies operate. Until someone asks us to explain the mechanism.

That horrible moment of clarity is where thinking actually happens. And Large Language Models, surprisingly, might be the perfect partners for creating more of those moments.

The Confidence Cliff

I was reading about how Yale researchers Leonid Rozenblit and Frank Keil discovered something fascinating. They asked people to rate their understanding of everyday devices, like toilets, zippers, cylinder locks. People felt confident. They knew how these things worked.

Then came the test: Explain it. Step by step. The actual mechanism.

Confidence crashed. After trying to write out the mechanism, people rated their own understanding noticeably lower than they had a few minutes earlier. The act of articulation revealed the void where understanding should have been.

The people who pushed through (who struggled to articulate despite the discomfort) developed real understanding. The struggle to explain created the knowledge they thought they already had.

The fundamental difference between recognition and production shapes everything about how we think we know things. Recognition feels like understanding. You see a toilet, you know what it does, your brain signals “understood.” Production (actually explaining the mechanism) reveals the truth.

Why Articulation Matters Now More Than Ever

We’ve entered an age where LLMs can generate infinite variations of surface-level content. Any middle manager can prompt ChatGPT to write a strategy document that sounds sophisticated. Any student can generate an essay that hits all the right notes. Any marketer can produce copy that checks every box.

But there’s a widening gulf between those who can generate and those who can think.

The ability to articulate (really articulate, not just produce words) is becoming the core differentiator. Because articulation is thinking made visible. And in a world flooded with generated text, the ability to think clearly through articulation is becoming scarcer and more valuable.

Consider what’s happening in knowledge work right now. People are using LLMs to write emails they haven’t thought through, create presentations they don’t understand, and generate reports they can’t defend. We’re automating the expression before we’ve done the thinking.

What you get is a kind of intellectual hollowing out. People feel productive because they’re producing output, but they’re not developing understanding. They’re using LLMs as a bypass rather than a bicycle for the mind.

I’ve watched this happen in real time with clients. They’ll generate a strategy document with a GPT or Claude model, feel satisfied with how it sounds, and present it confidently. Then someone asks a clarifying question and the whole thing collapses. The words were there but the thinking wasn’t.

I know CTOs who’ve started using “articulation interviews” where candidates have to explain technical concepts to an LLM that asks increasingly naive questions.

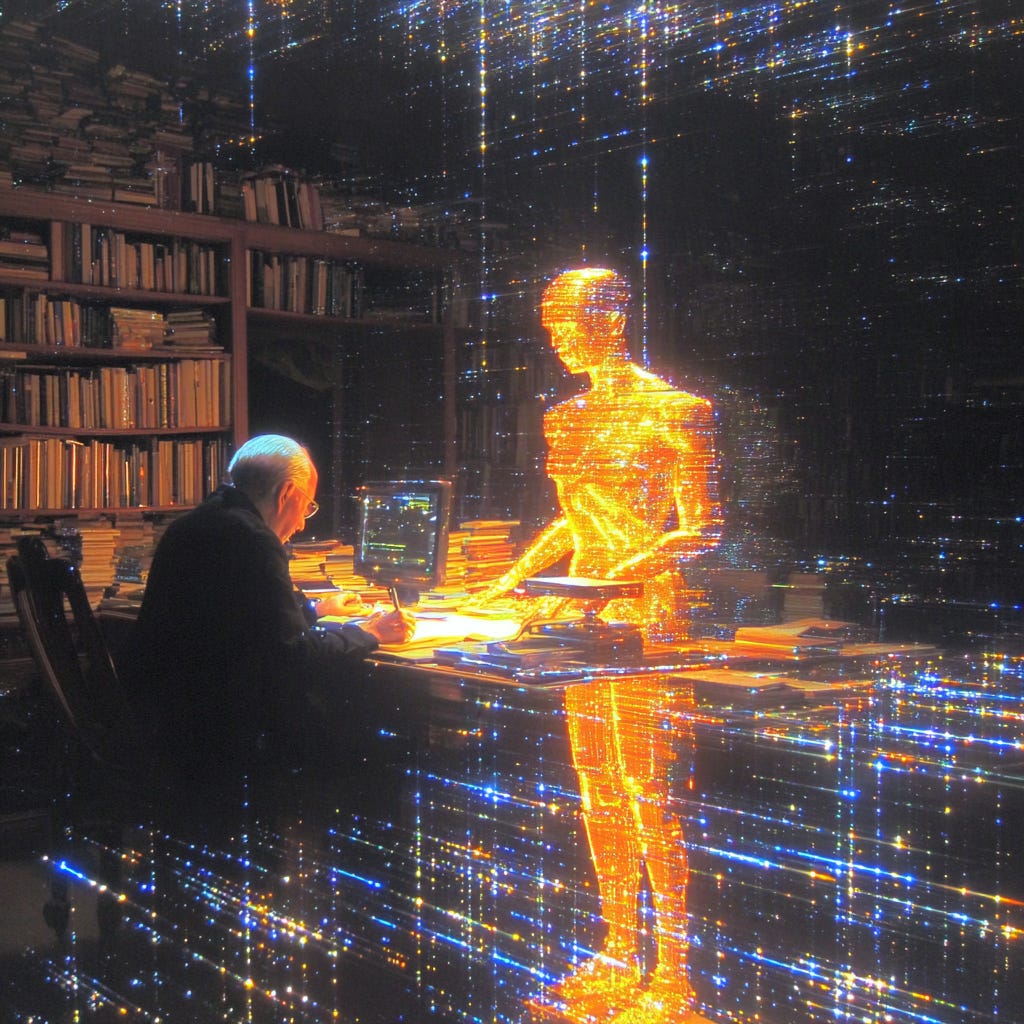

When the Machine Becomes Your Mirror

I was stuck on a product positioning problem. Had been for days. I knew what felt wrong but couldn’t articulate what would be right.

So I opened an LLM and typed:

“I’m trying to position this B2B SaaS product but I’m stuck because...”

And then I had to finish that sentence. Not with buzzwords. Not with hand-waving. With actual explanation.

“...because I think our value prop is about efficiency but customers keep talking about confidence and I don’t know how to bridge that.”

The LLM responded with something completely wrong. It suggested we focus on cost savings.

But that wrongness was a gift. It showed me I’d failed to explain the emotional component. So I tried again:

“No, it’s not about money. Users feel overwhelmed by complexity. Our tool makes them feel competent again. But how do you sell feelings to procurement departments?”

Now we were getting somewhere. Not because the LLM had the answer, but because articulating the real problem revealed its shape.

Research on self-explanation shows this pattern consistently. When we explain, we don’t just express our thoughts; we construct them. The words aren’t the output of thinking. They’re the machinery of thought itself.

The Fundamental Difference: Humans vs. LLMs as Listeners

When you explain something to a human, several things happen that don’t happen with LLMs:

Humans fill gaps. They use context, body language, and shared cultural knowledge. When you say “you know what I mean?” they often do, even when you’ve explained poorly. This social contract of understanding actually prevents clear thinking. We get lazy because humans are good at guessing.

Humans judge. There’s social risk in admitting confusion to another person. So we perform competence rather than seeking clarity. We use bigger words, more complex constructions, anything to avoid looking stupid.

Humans interrupt. Before you can fully articulate a thought, they’re already responding, adding their own ideas, shifting the conversation. The articulation never completes.

LLMs, paradoxically, are better thinking partners precisely because they’re worse social partners.

An LLM has no body language to read, no cultural context to assume, no ego to protect or threaten. It will let you fumble through an explanation without judgment. It will ask naive questions without embarrassment. It will wait patiently while you think.

Daniel Kahneman’s distinction between System 1 and System 2 thinking is a useful lens here. Conversations with other people tend to pull us into System 1 (fast, intuitive, social) thinking. We respond quickly, defensively, performatively. LLMs, because they’re clearly machines, make it easier to stay in System 2 (slow, deliberate, logical) thinking.

I discovered this difference accidentally. I was trying to explain a complex business model to my co-founder. Twenty minutes in, we were both frustrated. He kept finishing my sentences, incorrectly. I kept skipping steps I assumed he already knew, except he didn’t.

Later, I explained the same model to Claude. It took an hour. I had to break down every assumption, every connection, every mechanism. By the end, I understood my own model better than I had after months of thinking about it.

The Cognitive Architecture of Articulation

Your brain does something remarkable when you try to explain an idea. Multiple systems activate simultaneously:

The Default Mode Network fires up. That’s the brain system active during introspection and self-referential thinking. You’re not just recalling information; you’re examining your own understanding.

Broca’s Area (language production) has to work with the Prefrontal Cortex (executive function) to organize thoughts into speakable sentences.

Working Memory gets stretched to its limits. You’re holding the overall structure of your explanation while articulating specific details. Research shows this cognitive load is precisely what drives deeper understanding.

But there’s something else happening, something Carl Jung might have recognized: making the unconscious conscious through articulation.

Jung wrote about “active imagination”, the process of engaging with unconscious material by giving it form. When you articulate to an LLM, you’re doing something similar. You’re taking the vague, half-formed thoughts swimming in your unconscious and forcing them into conscious, linguistic form.

This connects to what Michael Polanyi called “tacit knowledge”: the things we know but can’t say. Most of our understanding is tacit. We know how to ride a bike but can’t explain the physics. We know good design when we see it but can’t articulate why.

LLMs become tools for surfacing tacit knowledge. Not because they understand it, but because explaining to them forces us to make the tacit explicit.

The Shadow Side of Understanding

Depth psychology offers another lens for understanding why articulation to LLMs works. Jung talked about the “shadow”, the parts of ourselves we don’t acknowledge or can’t see.

Our intellectual shadow includes all the things we pretend to understand but don’t. The concepts we use but can’t define. The strategies we recommend but can’t explain. The beliefs we hold but haven’t examined.

When you articulate to an LLM, you confront your intellectual shadow. The machine’s questions force you to examine what you’ve been avoiding.

I experienced this with the concept of “value creation.” I’d used that phrase hundreds of times in client meetings. It was part of my professional vocabulary. But when an LLM asked me to explain the mechanism of value creation—not what it is but how it happens—I was stumped.

That stumbling led to three hours of articulation, research, more articulation. By the end, I understood that I’d been using “value creation” to mean at least four different things, sometimes in the same conversation. The shadow knowledge had been running my consulting practice without my conscious awareness.

Your Three Modes of Articulation

In my exploration of prompting archetypes, I identified three modes of engaging with LLMs: Shaman, Wizard, and Politician. Each requires different articulation strategies.

Shaman Mode: Articulating the Unknown

The Shaman explores. You don’t know what you don’t know. So you articulate questions you didn’t know you had.

Try this prompt:

“I’m working on [problem]. What questions should I be asking that I’m not?”

When the LLM responds, don’t evaluate the questions. Articulate why each one feels relevant or irrelevant. The explanation reveals your hidden assumptions.

I used this recently for a client’s go-to-market strategy. The LLM asked: “What happens to your customers if they don’t solve this problem?”

I started to type “They lose efficiency” then stopped. That wasn’t true. The real answer was “Nothing. They muddle through like always.” That articulation changed everything. We weren’t selling improvement. We were selling transformation.

Wizard Mode: Articulating Combinations

The Wizard synthesizes. You take disparate ideas and articulate how they might connect.

Try this:

“Help me connect [seemingly unrelated thing A] with [thing B]. What patterns might link them?”

The magic is in how you have to explain why the connection might matter. You’re forced to articulate relationships you’ve only intuited.

Politician Mode: Articulating for Opposition

The Politician persuades. But first, they must understand resistance.

Try this:

“I believe [X]. Argue against me so I can strengthen my position.”

When the LLM pushes back, you can’t just dismiss it. You have to articulate why the objection fails, or admit that it doesn’t. Research on elaborative interrogation suggests that explaining why something is true, especially against resistance, builds understanding that mere study rarely does.
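If you want to keep the three starter prompts in one place, here is a minimal sketch in Python. The prompt wording is copied from the modes above; the field names (`problem`, `thing_a`, `thing_b`, `belief`) and the `build_prompt` helper are my own hypothetical labels, not part of any LLM platform. It only builds the text; you still paste it into whatever tool you use.

```python
# Starter prompts for the three modes, with the bracketed slots
# turned into named fields. The field names are hypothetical labels.
MODE_PROMPTS = {
    "shaman": "I'm working on {problem}. What questions should I be asking that I'm not?",
    "wizard": "Help me connect {thing_a} with {thing_b}. What patterns might link them?",
    "politician": "I believe {belief}. Argue against me so I can strengthen my position.",
}


def build_prompt(mode: str, **fields: str) -> str:
    """Fill in the template for one mode.

    Raises KeyError if the mode is unknown or a required field is missing.
    """
    return MODE_PROMPTS[mode.lower()].format(**fields)


if __name__ == "__main__":
    print(build_prompt("shaman", problem="a client's go-to-market strategy"))
    print(build_prompt("politician", belief="content marketing works"))
```

The missing-field error is deliberate: if you can't fill in the slot, you haven't yet articulated what you're asking.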

The Metacognitive Dance

Metacognition—thinking about thinking—is perhaps the most important cognitive skill we can develop. And articulation to LLMs might be the best metacognitive training we’ve discovered.

When you explain your thinking to an LLM, you’re forced to observe your own thought processes. You notice when you make logical leaps. You catch yourself using undefined terms. You realize when you’re arguing in circles.

This metacognitive awareness doesn’t develop from reading or listening. It develops from the struggle to articulate. The LLM becomes a mirror for your thinking patterns.

Donald Schön’s concept of “reflection-in-action” applies perfectly here. Professionals, he argued, think by doing and reflecting simultaneously. A doctor doesn’t diagnose then treat—they diagnose through treatment, adjusting their understanding as they go.

Articulation to LLMs creates the same reflection-in-action loop. You don’t think then explain—you think through explaining, adjusting your understanding as you articulate.

The Gift of Productive Misunderstanding

Programmers have known for decades about rubber duck debugging. You explain your code to a rubber duck. The duck doesn’t understand. So you explain more clearly. Still nothing. So you break it down further. And in that breaking down, you spot the bug.

The duck’s ignorance is its superpower.

LLMs have the same superpower, but with a twist. They’re not truly ignorant—they’re differently intelligent. When an LLM misunderstands your explanation, it’s showing you something valuable: the gap between what you said and what you meant.

Last week, I was explaining a content strategy to GPT-5. I said we needed to “own the conversation.”

The LLM started suggesting ways to dominate discussions and control narrative.

No, no, no. That’s not what I meant. But what DID I mean?

Twenty minutes of articulation later, I realized I meant “be the place where the best conversations happen.” Not ownership as control, but ownership as stewardship.

That misunderstanding (that productive friction) forced clarity I wouldn’t have achieved alone.

Gregory Bateson’s concept of “a difference that makes a difference” is key here. Information, he argued, is fundamentally about difference. We understand hot by contrasting it with cold, up by contrasting it with down.

LLM misunderstandings create cognitive differences. The contrast between what you meant and what was understood illuminates the actual shape of your thought.

The Phenomenology of Articulated Thought

Maurice Merleau-Ponty, the phenomenologist, argued that thought completes itself in expression. The thought doesn’t exist fully until it’s articulated. The speaking is the thinking.

This challenges our common assumption that we have complete thoughts in our heads that we then translate into words. Instead, Merleau-Ponty suggests, the words are where the thoughts become real.

When you articulate to an LLM, you’re experiencing this phenomenon directly. You start explaining something you think you understand, and midway through, new understanding emerges. The articulation itself is creating the knowledge.

I see this constantly in my sessions with Claude. I’ll start explaining a problem, certain I understand it. Three paragraphs in, I’ll write something that surprises me. “Where did that come from?” I wonder.

It came from the articulation itself. The thought didn’t exist until I wrote it.

Building Your Articulation Lab

In Claude Projects (or any LLM platform with persistent context), create an environment optimized for thinking through articulation.

Custom Instructions:

Simulate being an articulation coach. Your primary job is to help me think by forcing me to explain clearly.

When I share ideas:

- Ask me to explain mechanisms, not just descriptions

- Point out when I’m using fancy words to hide confusion

- Request specific examples when I’m too abstract

- Play different levels of understanding (expert, novice, child)

- Celebrate when I achieve clarity through struggle

Never just give me answers. Make me work for understanding.

Remember: Confusion is the beginning of clarity. Embrace it.

Project Files to Add:

Create a file called articulation_patterns.md:

# My Articulation Patterns

## Where I Get Fuzzy

- When explaining “why” vs “what”

- Technical concepts to non-technical people

- Emotional components of logical decisions

## My Jargon Crutches

- “Leverage” (usually means “use”)

- “Optimize” (usually means “improve”)

- “Strategic” (usually means “important”)

## Breakthrough Moments

- [Date]: Realized “efficiency” was actually about confidence

- [Date]: Discovered I was solving wrong problem

The Articulation Gym

If articulation builds thinking muscles, we need a workout routine. Based on cognitive science research, here are exercises that actually work:

Exercise 1: The Mechanism Challenge

Pick something ordinary: a toilet, a zipper, democracy. Now explain to an LLM not what it does but HOW it works.

You’ll likely fail. That’s the point.

When I tried explaining a toilet, I got stuck immediately. “Water goes in and... pushes things down?” No. That’s not a mechanism.

The LLM asked: “What makes the water stop filling?”

I didn’t know. I had to look it up. The float valve. But then: How does the float valve work? More articulation needed. Each explanation revealed another gap.

Exercise 2: The Five-Year-Old Test

Explain your work to an LLM as if it’s a smart five-year-old. No jargon allowed.

I tried this with “growth hacking.” First attempt: “It’s about finding scalable ways to acquire customers.”

LLM-as-five-year-old: “What’s scalable mean?”

“Um, it means it can get bigger easily.”

“How?”

And suddenly I’m explaining network effects, viral coefficients, and unit economics without those words. The constraint forces clarity.

Exercise 3: The Assumption Excavator

State something you believe. The LLM asks: “What must be true for that to hold?”

I believe content marketing works. But what must be true? People must read content. Must trust what they read. Must remember it when making decisions. Must have budget authority. Must prioritize this problem.

Each assumption I articulate becomes a hypothesis to test. The articulation transforms vague strategy into specific experiments.

Exercise 4: The Cognitive Load Transfer

This comes from working memory research. Explain a complex process while the LLM randomly asks you to define terms you use.

You: “The customer journey starts with awareness...”

LLM: “Define ‘journey’ in this context.”

You: “The sequence of interactions between initial contact and purchase.”

LLM: “Continue, but now define ‘awareness.’”

This forces you to hold the overall structure while articulating details—exactly the cognitive challenge that builds deeper understanding.
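You can rehearse this exercise before opening an LLM. The sketch below is a rough stand-in under my own assumptions: you supply a list of terms you suspect you lean on, and a hypothetical helper, `definition_prompts`, returns a "define this" interruption for each one that shows up in your explanation, in the order you used them. It is deterministic rather than random, and it matches whole words only.

```python
import re


def definition_prompts(explanation: str, terms: list[str]) -> list[str]:
    """Return one interruption per listed term that appears in the
    explanation, ordered by where the term first appears."""
    found = []
    for term in terms:
        # Whole-word, case-insensitive match so "aware" won't hit "awareness".
        match = re.search(r"\b" + re.escape(term) + r"\b", explanation, re.IGNORECASE)
        if match:
            found.append((match.start(), term))
    found.sort()
    return [f"Define '{term}' in this context." for _, term in found]


if __name__ == "__main__":
    draft = "The customer journey starts with awareness..."
    for prompt in definition_prompts(draft, ["awareness", "journey", "funnel"]):
        print(prompt)
```

Answering each interruption in writing, then picking the explanation back up where you left off, gives you the same hold-the-structure-while-defining-details load the exercise is after.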

The Calibration Moment

Research on metacognition suggests a simple but powerful exercise:

Before explaining anything to an LLM, rate your understanding from 1 to 10.

After explaining, rate again.

The drop is your learning opportunity. The bigger the drop, the more you’re about to grow.

I was at an 8 for understanding my own writing process. After trying to explain it to Claude, I dropped to a 4. The articulation revealed I didn’t actually know why I make certain choices. That gap became my curriculum.
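If you want to track this over time, the arithmetic is simple enough to sketch. The following is a minimal Python example under my own assumptions: the `Calibration` record and `biggest_gaps` helper are hypothetical names, and the second log entry is made up for illustration. The drop is just the before rating minus the after rating.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    topic: str
    before: int  # self-rating (1 to 10) before explaining
    after: int   # self-rating (1 to 10) after explaining

    @property
    def drop(self) -> int:
        """How far the rating fell; a negative value means it rose."""
        return self.before - self.after


def biggest_gaps(log: list[Calibration]) -> list[Calibration]:
    """Sort sessions so the largest drops come first."""
    return sorted(log, key=lambda c: c.drop, reverse=True)


if __name__ == "__main__":
    log = [
        Calibration("email triage", before=6, after=5),        # hypothetical entry
        Calibration("my writing process", before=8, after=4),  # the example above
    ]
    for entry in biggest_gaps(log):
        print(f"{entry.topic}: dropped {entry.drop}")
```

Sorting by drop puts the topics where you were most overconfident at the top, which is a reasonable place to start the next session.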

When Articulation Becomes Thinking

There’s a moment in every good articulation session where something shifts. You stop trying to explain what you already know and start discovering what you’re thinking.

The words come slower but cleaner. You backtrack, revise, rebuild. You say things like “No wait, that’s not quite right” and “Actually, what I really mean is...”

You’re experiencing what Lev Vygotsky called the “zone of proximal development”, which is the space between what you can do alone and what you can do with support. The LLM isn’t teaching you. It’s creating the conditions for you to teach yourself.

The Paradox of AI Assistance

LLMs make us better thinkers precisely because they force us to do the work ourselves. When you Google an answer, you get information. When you articulate to an LLM, you build understanding.

The difference is profound. Information is external. Understanding is internal. Information can be forgotten. Understanding changes how you think.

The self-explanation effect research confirms this. The struggle to explain is the learning. LLMs just make that struggle more productive by being the perfect confused listener.

Recognizing Real Thinking vs. Performance

How do you know if you’re actually thinking through articulation or just performing?

Signs of real thinking:

Confusion before clarity

Multiple revisions

“Aha” moments

Discovering implications you hadn’t considered

Feeling slightly uncomfortable

Sessions that take longer than expected

Surprising yourself with your own words

Signs of performance:

Smooth, immediate answers

No revision needed

Feeling clever

Using impressive vocabulary

No surprises

Quick sessions

Confirming what you already believed

When I’m performing, my sessions with LLMs are short and satisfying. When I’m thinking, they’re long and frustrating, until suddenly they’re not. The frustration is the work. The struggle is the point.

The Daily Practice

Every morning, before checking email or diving into work, I spend 15 minutes in articulation practice with an LLM. The prompt is always the same:

“I’m confused about something. Let me try to explain it to figure out what I actually think.”

Then I pick whatever’s bugging me. Could be a client problem, a writing challenge, a life decision. The topic doesn’t matter. What matters is the practice of articulation.

Over months, I’ve noticed changes:

I catch my own BS faster

I identify assumptions before they bite me

I explain complex ideas more simply

I think more clearly under pressure

I’m more comfortable with intellectual discomfort

I can hold uncertainty longer before rushing to conclusions

The practice has trained my articulation muscles. Not to speak better, but to think better.

Your Articulation Challenge

Try this right now. Open any LLM and explain something you do every day but have never had to articulate. Your morning routine. Your email system. How you decide what to eat.

Don’t aim for eloquence. Aim for mechanism.

When the LLM asks follow-up questions, resist the urge to hand-wave. Explain the actual steps, the real decision points, the true sequence.

Notice where you get stuck. That’s where your understanding is thinnest. That’s where the work is.

Then tomorrow, do it again with something else.

Build your articulation muscles one explanation at a time.

Because in the age of AI, the humans who can think clearly (who can articulate precisely) will have an edge.

The loop is simple: Articulate → Discover gaps → Clarify → Understand deeper → Articulate better.

Each revolution of the loop sharpens your thinking.

Each conversation with an LLM makes your next human conversation better.

The articulation loop, the ability to truly think and to articulate genuine understanding, becomes the differentiator.

Talk again soon,

Samuel Woods

The Bionic Writer